The Pharma Software Users Group (SUG) Japan held a Single-Day Event (SDE) in Tokyo on 7 April 2025. This was the first face-to-face event since the pandemic, and took place in Chugai Pharmaceutical offices in central Tokyo. Daniel was fortunate to be able to attend in person and gave a presentation, and shares his experience and notes here. In addition, he also led an R package building workshop the day after the conference, which was kindly hosted by GSK in Tokyo. More on that below too.

I would like to thank the organizers, Yuichi Nakajima, Naoko Izumi and Mirai Kirihara, for the invitation and the great organization of the event. It was a pleasure to meet so many nice statisticians and programmers in person, and I am looking forward to the next events in Japan!

RCONIS presentation

I presented “Designing Clinical Trials in R with rpact and crmPack” and the slides are available here. If you wonder whether this is the same presentation as in Shanghai, then you are right - and also here this was the only presentation in English (all others were in Japanese). Nevertheless the audience was well engaged and interested. My main objectives were to raise awareness about the open source R packages rpact and crmPack. Plus it was a great opportunity to distribute hex stickers of the two package logos, as well as RCONIS stickers, too! For next time, I need to remember to bring more business cards too, because literally everyone gave me one and I did not bring enough to the conference.

Presentation notes

Here come a few notes that I took during the meeting. You can also find all the abstracts here.

Efforts Regarding the Use of Study Data at PMDA

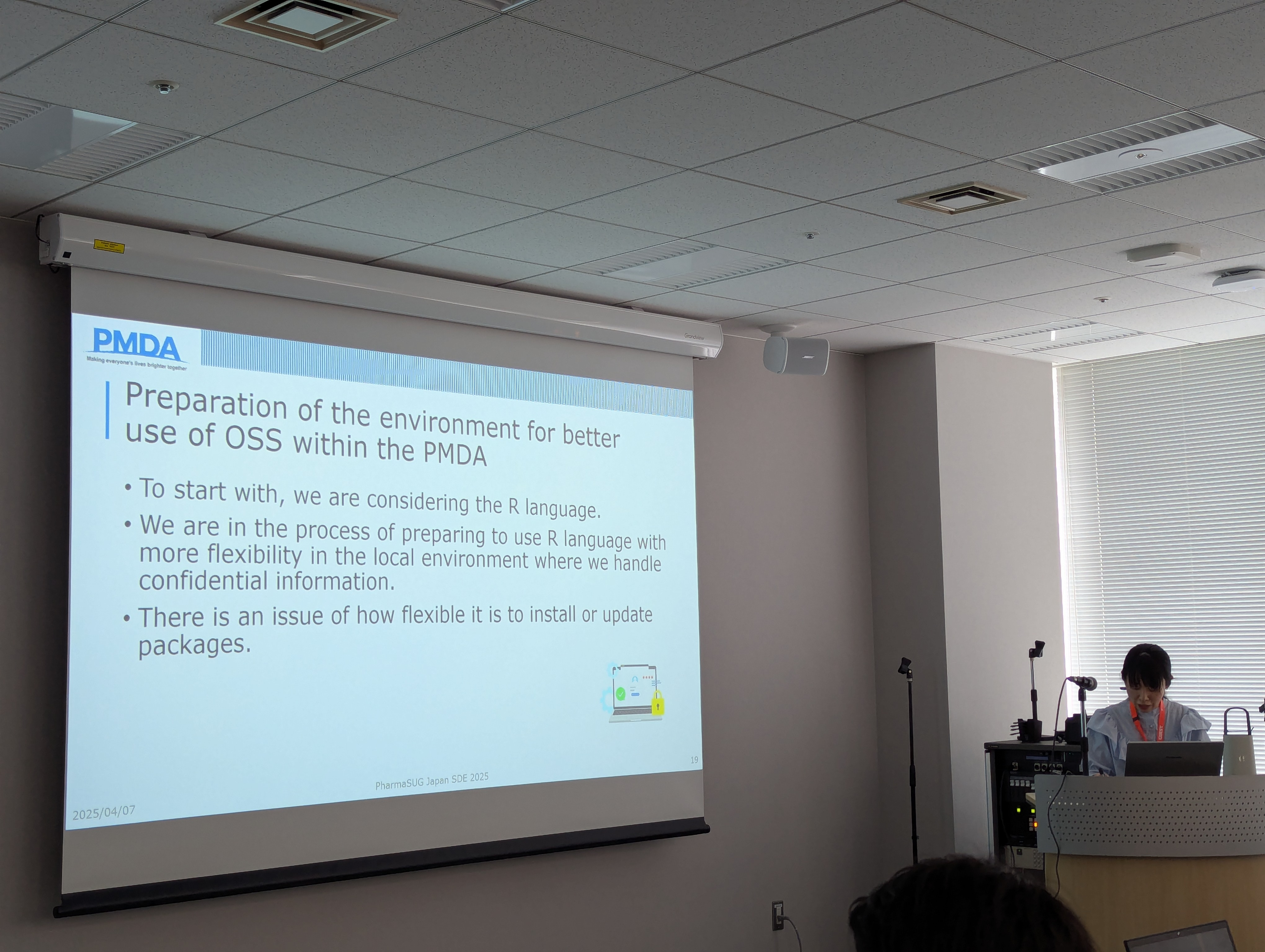

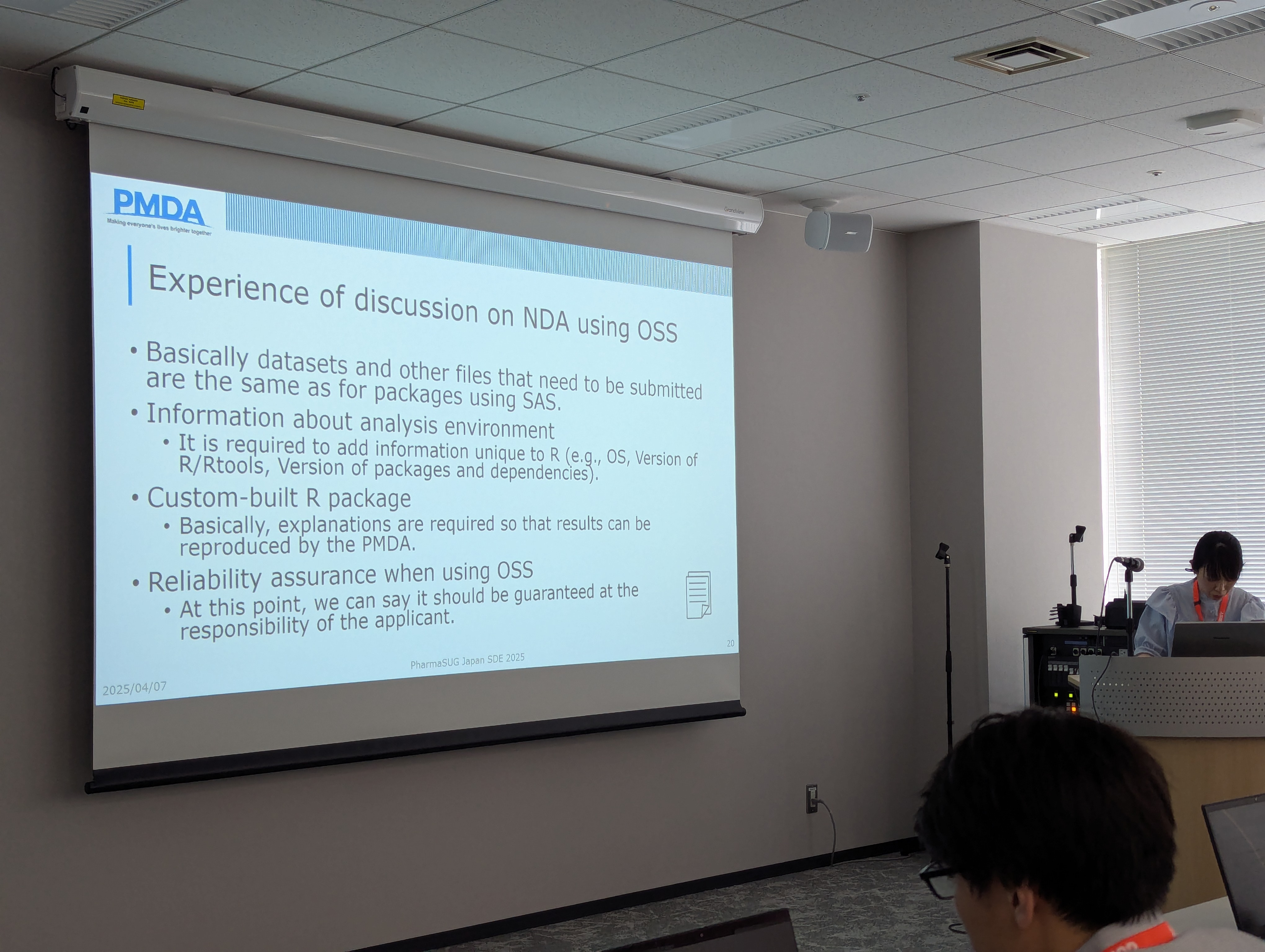

Hiromi Sugano from the Japanese regulatory authority (Pharmaceuticals and Medical Devices Agency, PMDA) presented this opening talk (slides). Since 2020, most NDAs are submitted to PMDA with electronic study data. Questions about this have decreased over time due to sufficient information being provided to sponsors. Internal data analyses help PMDA to be more efficient (i.e. reduce the amount of inquiries to sponsors). Sometimes, especially in complex analyses (e.g. multiple imputation) it can be difficult to find details for methods of the analyses (including primary!). I was particularly interested in the fact that she mentioned open source a couple of times during her presentation. PMDA is preparing for submissions with open source software (OSS), and the future tasks also include OSS. It seems that .xpt is still used as the standard data exchange standard format. R package installation and update are frequent issues. The renv set of details (which R version, which packages, etc.) needs to be supplied by the applicant. Still good to hear that PMDA would like to “continue to consider” the use of OSS in NDA submissions.

Here are a few key slides she presented:

CDISC Open Rules Engine (CORE)

Hajime Shimizu from ICON presented about the CDISC Open Rules Engine (CORE) (slides). This is an open source project by the CDISC Open Source Alliance (COSA) and is available on GitHub at github.com/cdisc-org/cdisc-rules-engine. It provides a reference implementation for checking the CDISC rules compliance of CDISC datasets. There were two major motivations for the CORE project:

- Rules were not extracted or managed by CDISC during the first few years of SDTM standard

- Different check tools provided different results, and this was then followed by community based agreement rather than official expectations from CDISC

The idea for CORE is that any CDISC deliverable can be checked and validated by the CORE tool. One current issue is that PMDA rules are not included yet at the moment.

The project is licensed under an MIT license, and several developers are working on it, sponsored by COSA.

Modernizing Software and the Evolution of Linked Data in the Pharmaceutical Industry

Takahiro Shibata from Altair Engineering presented this talk (slides). He started with a little history about the Altair company, which was established 1985 in Michigan, U.S. (and thus is almost the same age as me!). It was listed on the NASDAQ exchange, and has 3 proprietary platforms: simulation & design, AI & analytics, HPC & cloud. However, on 26 March 2025 (so just less than 2 weeks before this talk) Siemens acquired Altair for $10bn USD - and so it is no longer an independent company now. He presented two challenges:

- Inconsistent data formats and semantics

- Legacy systems

For the first challenge, the solution is semantic linkage of data. This is not the same as knowledge graphs (KG), for which the interest has increased in combination with LLMs in the last few years. He presented the Gartner Impact Radar for 2024 (see e.g. here). The Altair RapidMiner platform is a solution for this linkage, includes also a chat interface and has benefits vs. a pure LLM, in particular it integrates database information from internal private sources. As an example Takahiro showed a video from Merck how they retrieved information about design a trial and recruitment options (link).

For the second challenge, modernization of the software stack including OSS can help. The so-called “digital cliff” has arrived now, and there is real risk of hefty economic losses when not addressing the modernization - in Japan but not only there I presume. He presented Altair SAS Language Compiler (SLC), which also has a “personal edition” available for free download here. This can help to integrate legacy SAS programs in modern workflows and environments.

Lunch break

While this was not a presentation, we had nice Bento boxes delivered to the conference room, which is worth sharing:

Then directly after lunch was my presentation (see above).

Examples of R Shiny Usage in Clinical Development

Hiroaki Fukuda from MSD presented this talk (slides). He started with the workflow, recommending that you should always consider the objective of the Shiny app first: Is it increasing the quality, productivity or preventing mistakes? Afterwards one should investigate the feasibility of the project: For example, test with a core/challenging function part, in order to estimate the difficulty/effort. He noted that with GenAI, it is not that hard to start, since the GenAI also supports generation of HTML and JavaScript code for advanced user interfaces. For production implementation, he recommends an agile development with frequent releases. Other considerations include which R packages to use in the backbone and which code repository to work in. He continued by sharing 3 examples:

- Sample size calculator for the Japanese population, based on the input from the overall population

pptxslidedeck generator (fromrtffiles as input)- Clinical trial results viewer

Other ideas he mentioned for future apps include a data review tool and a project dashboard.

R Package Management at Novartis

Shunsuke Goto from Novartis presented this talk (slides). As an intro, he discussed the attributes that we need from good R packages, respectively why we need to manage R packages at all:

- Reliability: Does the package produce correct results?

- Consistency: Do different versions produce the same result?

- Compatibility: Does the package work with other dependencies with different versions?

Therefore, we need to assess the risk and validate packages based on their risk, manage the versions of packages and also their dependencies. The risk is categorized by an internal R package called brave. In order to reduce the risk, e.g. the number of downloads per month, publication in a journal etc. can be taken into account. The Novartis workflow has the following features. The versions are locked in the global environment. However, ad-hoc installations are also possible if urgently needed - either for project or for exploratory use cases. Upgrades of package versions, as well as addition of packages for the next release are standard. Regarding the validation, there are three qualification steps:

- IQ: Installation qualification

- OQ: Operational qualification

- PQ: Performance qualification

Both IQ and OQ are done by the IT team, while PQ is done by the user, and reviewed by a governance team for medium or high risk packages. A package management tool also allows ad-hoc local installation, where IQ and OQ tests are automatically executed, and optional PQ test results are stored in the project folder (only for project use case, not for exploratory).

Creating Interactive Web Applications with Python (streamlit)

Taku Sakaue from Chugai presented this talk (slides). Streamlit is a powerful Python library which can produce interactive web applications. You can publish a web app without deploying on a web server, by using Streamlit Cloud (link). Also a local installation of Streamlit is easy via pip. The code of Streamlit apps does not separate the UI and backend, which is different from Shiny (for R or for Python). See the Posit page here for a comparison of the two frameworks. Posit mentions that speed can be an issue with Streamlit apps, because the whole app code is executed upon every UI change.

Development of AI-SAS for RWE

Takuji Komeda from Shionogi presented this talk (slides). The AI-SAS tool has helped his company to reduce the time needed for clinical trial analysis. Now they also wanted to apply it to RWD. Challenges included vendor dependent raw data format, millions of cases/rows in a data set, unorganized training data, confounding bias etc (statistical limitations beyond programming problems). Their idea was now to first map the RWD to a standard common data model (CDM) format, and then let AI-SAS tool help to write the SAS programs to analyze the centralized CDM data. One challenge with Japanese CDM in particular was that the disease code cannot be converted to the OMOP CDM. He described the following workflow:

- Create a concept sheet, which is a simple table of metadata

- AI-SAS produces the ADS specification, same for TFL specification (these are both Excel sheets)

- Create ADS and TFL SAS program code via AI-SAS

- Run code / generate report summarizing the whole process

Considerations on Creation of Statistical Deliverables with Generative AI Tools

Ryo Nakaya from Takeda presented this talk (slides). He started with a cartoon generated by OpenAI’s ChatGPT, which had just added image creation to its 4o model. He continued with describing Agentic AI, which are autonomous systems which can decide and perform without human intervention. Examples include OpenAI deep research, Softbank AGI, Google Gemini Gem. The difference to usual LLMs is that they are goal-oriented and that they can do tasks autonomously (different levels: workflow-based, hybrid, fully autonomous). He mentioned Dify which is an open-source LLM app development platform. As an example he used agentic AI to create a QC program based on a specification: This was workflow-based, so starting with a spec check, then generating code, etc. He used the PhUSE Example ADaM spec for this. With a Python package he encapsulated functions with which the LLM was then prompted to generate the code. He used Claude Sonnet as the GenAI engine here.

Panel Discussion

The programming heads council then had a panel discussion, but unfortunately I could not understand anything besides some title slides 😆

I am looking forward to the next events in Japan, and I hope to be able to attend again!

R Package Workshop

The workshop on 8 April morning was kindly organized in the GSK offices in Tokyo by Yuichi Nakajima. I used a subset of the slides from useR 2024 to walk the 16 participants through the R package syntax and how to create documentation and unit tests. Participants were from various international and Japanese Pharma companies, including GSK, Sumitomo, Takeda, Chugai, J&J, Novartis, Sanofi, Eisai and Boehringer Ingelheim.

Here are a few photos:

It was as usual a lot of fun and I am glad that this openstatsware originated workshop now also made it to Japan!